Introduction

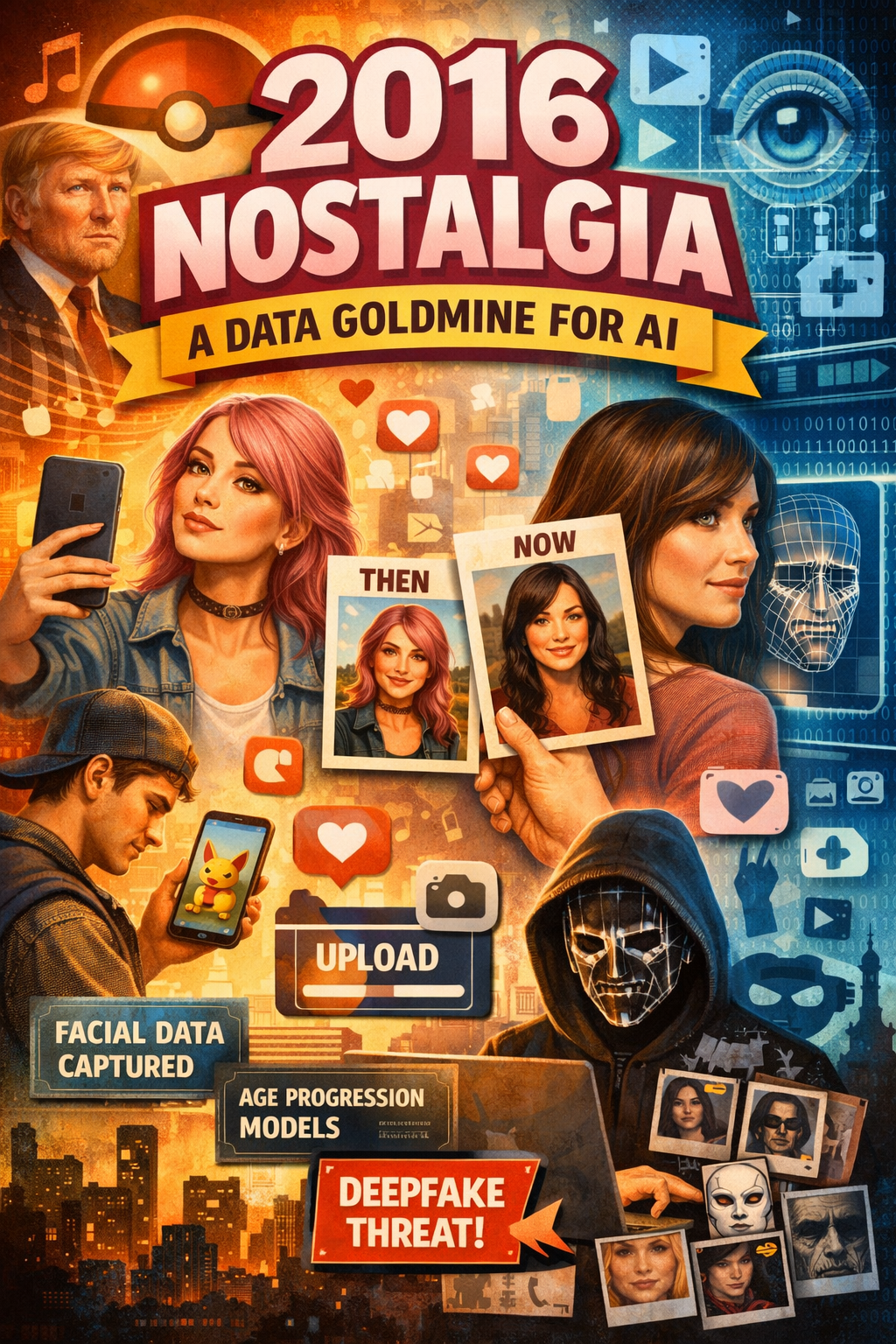

A viral social media trend inviting users to repost their photos from 2016 has sparked widespread nostalgia across platforms like Instagram and X. While many participants view it as a harmless walk down memory lane, cybersecurity and AI experts warn that the trend is quietly generating extremely valuable training data for artificial intelligence systems.

Background and Context

The year 2016 feels deceptively recent yet culturally distant. It was the era when Justin Bieber topped charts with “Love Yourself,” Pokémon Go reshaped mobile gaming, and Donald Trump won his first presidential election.

The “2016 again” trend capitalizes on this nostalgia, with users posting decade-old photos alongside reflections on simpler times. However, critics argue that beneath the sentimental storytelling lies a large-scale, voluntary data collection event.

Why AI Companies Value These Images

Jonathan Drake Steele, founder of cybersecurity consulting firm Steele Fortress LLC, describes the trend as a “data goldmine” for AI developers. One of the most persistent challenges in AI training is acquiring reliable temporal data, meaning images of the same individual across different points in time.

By sharing old and current photos on verified personal profiles, users are unintentionally providing matched datasets spanning eight to ten years. These datasets include facial geometry, lighting variations, background environments, and contextual metadata. Such information is critical for improving age-progression models, facial recognition systems, and synthetic media generation.

Deepfakes and the Expanding Risk Surface

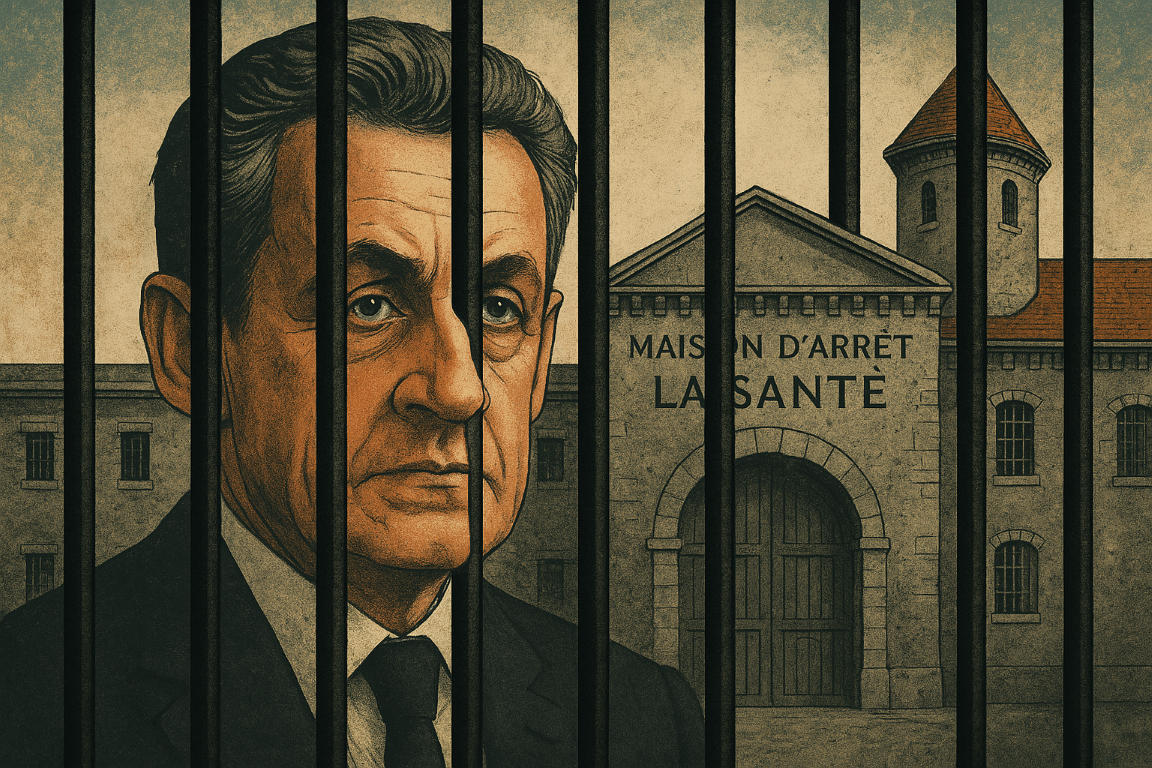

The growing sophistication of deepfakes has intensified concerns around trends like this. Facial aging data enhances the realism of synthetic identities, which are increasingly used in scams, impersonation, revenge pornography, and harassment campaigns.

The issue has gained enough prominence that the US Senate recently passed the DEFIANCE Act. If enacted, the law would allow victims of non-consensual sexualized deepfakes to pursue civil legal action. Despite legislative efforts, recent controversies involving AI-generated explicit images on major platforms demonstrate how difficult it is to fully contain misuse.

Clean Data in a Synthetic Internet

Aatif Belal, an applied AI manager at Deloitte, notes that older photos are not inherently superior but are increasingly valuable due to their authenticity. Images from 2016 predate the explosion of AI-generated content that accelerated after the release of ChatGPT in 2022.

As synthetic imagery floods the internet, genuine human-captured photos from earlier periods serve as cleaner reference data. They reflect natural fashion, environments, and social behavior that are less prevalent in today’s highly curated and AI-influenced content ecosystem.

Why Nostalgia Resonates Now

The popularity of the trend also reflects broader social and psychological dynamics. According to digital marketing strategist Michael Smith of Buyergain LLC, nostalgia often surges during periods of uncertainty. Many people now remember 2016 as a time before the COVID-19 pandemic, major geopolitical conflicts, and persistent economic anxiety.

Psychological studies suggest nostalgia can temporarily boost optimism, self-esteem, and perceived meaning in life. With a majority of Americans reporting stress about the nation’s future, it is unsurprising that public figures such as Kim Kardashian and Meghan Markle have also participated in the trend.

Expert Commentary

While participation in nostalgic trends may feel harmless, cybersecurity experts stress the importance of digital awareness. Images shared publicly can be scraped, archived, and repurposed long after the trend fades. Once biometric data enters the AI training pipeline, control over its future use is effectively lost.

Outlook

As AI systems become more reliant on historical, human-authored data, similar trends are likely to emerge and be quietly leveraged for model training. Without stronger transparency and data governance from major platforms, users will continue to trade privacy for participation without fully understanding the implications.

Sources

- Cybernews – Coverage on social media trends and AI data usage
  https://cybernews.com/security/social-media-trends-ai-data-training/
- Deloitte Insights – AI ethics, data quality, and synthetic data challenges
  https://www.deloitte.com/global/en/insights/focus/cognitive-technologies/artificial-intelligence-data-ethics.html
- Wired – How facial data and aging datasets are used in AI systems
  https://www.wired.com/story/facial-recognition-age-progression-ai/
- MIT Technology Review – The growing problem of deepfakes and biometric misuse
  https://www.technologyreview.com/2023/05/10/deepfakes-ai-risk/
- U.S. Congress – DEFIANCE Act legislative summary
  https://www.congress.gov/bill/118th-congress/senate-bill/3696
- Pew Research Center – Public stress, nostalgia, and social media behavior
  https://www.pewresearch.org/social-media/