Introduction

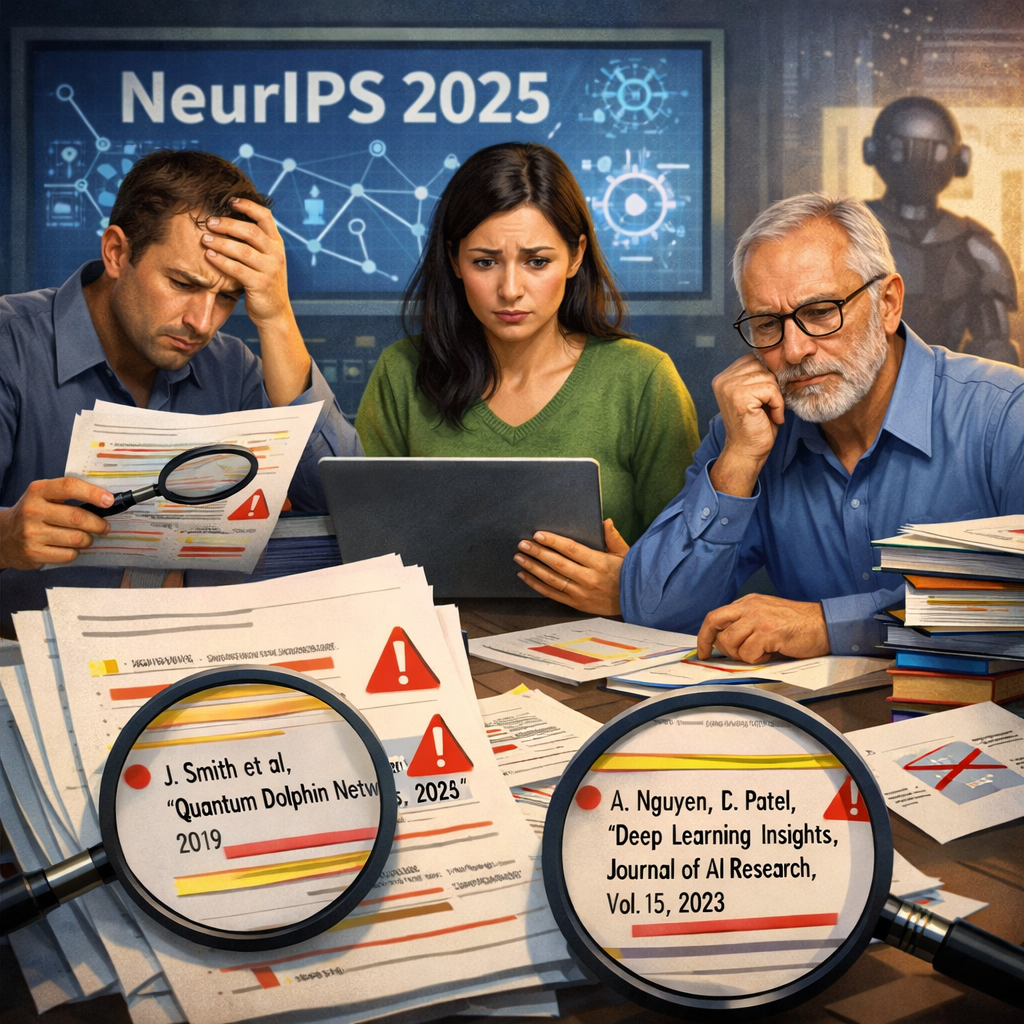

A new large-scale investigation by GPTZero has revealed a growing integrity challenge at top-tier AI research venues. After analyzing 4,841 accepted papers, the company confirmed more than 100 hallucinated citations in papers published at NeurIPS 2025, one of the world’s most prestigious artificial intelligence conferences.

The findings underscore how generative AI tools, when misused or insufficiently reviewed, can silently undermine academic rigor at scale.

Background and Context

NeurIPS, alongside conferences such as ICLR, ICML, and AAAI, sits at the core of global AI research. However, explosive growth in submissions has placed unprecedented strain on peer review systems.

Between 2020 and 2025, NeurIPS submissions reportedly surged by over 220 percent. This rapid expansion, combined with increased reliance on AI-assisted writing tools, has created fertile ground for undetected citation errors and fabricated references.

Key Findings from the Investigation

GPTZero analyzed 4,841 papers accepted to NeurIPS 2025 and identified:

- Over 100 confirmed hallucinated citations across more than 50 published papers

- Fabricated authors, non-existent paper titles, and invalid DOIs and URLs

- “Vibe citing,” where AI blends elements of real publications into convincing but false references

- Multiple cases where hallucinated citations passed review by three or more expert reviewers

These papers were not merely submissions. They were accepted and presented, and they have effectively entered the permanent scientific record.

What Are Hallucinated Citations and Vibe Citing?

GPTZero defines hallucinated citations as references that cannot be verified in any credible academic database and that exhibit patterns typical of large language model generation.

A related phenomenon, termed vibe citing, describes citations that appear legitimate at a glance but collapse under scrutiny. Common indicators include:

- Paraphrased or hybridized titles drawn from unrelated papers

- Fabricated or partially altered author lists

- Invented publication venues or mismatched metadata

While minor citation errors are common in human writing, these systematic patterns are strongly associated with AI-generated text.
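The indicators above can be illustrated with a small Python sketch. It is not GPTZero's method, which the article does not describe. It assumes a local list of known references stands in for an academic database, and the names `Reference`, `title_similarity`, and `classify_citation`, along with both thresholds, are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class Reference:
    """A cited work reduced to the two fields this sketch compares."""
    title: str
    authors: tuple[str, ...]


def title_similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two titles, ignoring case."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def classify_citation(cited, known, exact=0.95, partial=0.6):
    """Label a citation by comparing it with its closest known reference.

    `known` is a stand-in for a bibliographic database (an assumption).
    The `exact` and `partial` thresholds are illustrative, not calibrated.
    """
    best = max(
        known,
        key=lambda ref: title_similarity(cited.title, ref.title),
        default=None,
    )
    if best is None:
        return "unverified"

    score = title_similarity(cited.title, best.title)
    same_authors = (
        {name.lower() for name in cited.authors}
        == {name.lower() for name in best.authors}
    )

    if score >= exact and same_authors:
        return "verified"
    if score >= partial:
        # Title resembles a real paper, but the title or author list is off:
        # the pattern the article calls "vibe citing".
        return "possible hybrid"
    return "unverified"
```

A citation whose title matches a known paper but whose author list differs would be labeled a possible hybrid here, while a title with no close match is simply unverified. A real check would query an actual bibliographic database and would still need human review, since honest typos can trip the same thresholds.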

Scale of the Peer Review Challenge

Although NeurIPS accepted only about 24.5 percent of submissions, the investigation found that dozens of papers containing hallucinated citations were accepted while more than 15,000 other submissions were rejected.

According to NeurIPS policy, fabricated citations are grounds for rejection or post-publication action. The fact that so many passed review highlights the limits of manual verification under current workloads.

Response and Mitigation

GPTZero positions its Hallucination Check tool as a safeguard at multiple stages of the publication pipeline:

- For authors: Pre-submission validation of references

- For reviewers: Rapid identification of suspicious citations

- For editors and organizers: Combined detection of AI-generated text and fabricated sources
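As a rough illustration of what pre-submission reference validation could involve at its simplest, the Python sketch below screens the DOI and URL of a reference for obvious formatting problems. It is not the Hallucination Check tool, whose internals the article does not describe. The function names are hypothetical, and the DOI pattern is a commonly used heuristic, not an official specification.

```python
import re
from urllib.parse import urlparse

# Heuristic shape of a modern DOI: "10.", a 4-9 digit registrant code,
# a slash, then a non-empty suffix. This is an assumption, not a standard.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")


def doi_looks_valid(doi: str) -> bool:
    """Check only the syntax of a DOI string."""
    return bool(DOI_PATTERN.match(doi.strip()))


def url_looks_valid(url: str) -> bool:
    """Check that a URL has an http(s) scheme and a host part."""
    try:
        parts = urlparse(url.strip())
    except ValueError:
        return False
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def screen_reference(doi=None, url=None):
    """Return a list of formatting problems found in one reference."""
    problems = []
    if doi is None and url is None:
        problems.append("no DOI or URL to check")
    if doi is not None and not doi_looks_valid(doi):
        problems.append("malformed DOI")
    if url is not None and not url_looks_valid(url):
        problems.append("malformed URL")
    return problems
```

An empty list only means nothing looked malformed. A well-formed DOI or URL can still point nowhere, so a syntactic screen like this would have to be followed by an actual lookup in a bibliographic database and by human review.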

Following earlier findings related to ICLR 2026 submissions, GPTZero has reportedly begun coordinating with conference organizers to improve citation screening in future review cycles.

Expert Commentary and Industry Impact

The investigation does not single out reviewers or organizers for blame. Instead, it highlights a systemic vulnerability in which human-centric review processes are being overwhelmed by AI-accelerated content production.

As generative models become more capable, the main risk is not overt plagiarism but subtle, convincing inaccuracies that erode trust in published research.

Outlook

If left unaddressed, hallucinated citations could compromise literature reviews, misdirect future research, and weaken confidence in academic publishing. The NeurIPS findings may serve as a turning point, accelerating adoption of automated verification tools alongside traditional peer review.

The broader lesson is clear: as AI reshapes research workflows, integrity safeguards must evolve just as quickly.

Credits and Source Attribution

Original Investigation and Reporting:

Nazar Shmatko, Alex Adam, Paul Esau

Published by: GPTZero Investigations Team

Date: January 21, 2026

This article is based on publicly available investigative content authored by the individuals named above, with full credit retained for the original reporting and analysis.