Introduction

India has formally articulated its long-term vision for governing artificial intelligence with the release of the White Paper “Strengthening AI Governance Through Techno-Legal Framework” by the Office of the Principal Scientific Adviser to the Government of India. The document marks a significant evolution in India’s AI policy thinking, moving beyond standalone regulation toward a governance-by-design model that integrates legal obligations, technical controls, and institutional oversight across the entire AI lifecycle.

At a time when AI systems are becoming autonomous, adaptive, and globally deployed, the White Paper positions India as a proponent of a pragmatic, innovation-aligned governance framework that prioritizes trust, accountability, and societal protection without slowing technological progress.

Background and Policy Context

Conventional regulatory approaches were designed for static, predictable technologies. Artificial intelligence, by contrast, is dynamic, opaque, and capable of learning and evolving after deployment. These characteristics expose regulatory gaps related to privacy breaches, algorithmic bias, deepfakes, security vulnerabilities, and unclear accountability.

India’s AI governance landscape currently relies on a combination of baseline laws such as the Information Technology Act 2000 and the Digital Personal Data Protection Act 2023, sector-specific regulations issued by bodies such as the RBI and SEBI, and policy instruments including the India AI Governance Guidelines 2025. While these instruments provide foundational safeguards, they are largely reactive and not purpose-built for AI systems operating at population scale.

The White Paper argues that India requires a governance approach tailored to its unique socio-economic diversity, digital public infrastructure, and innovation-driven growth model, rather than importing external regulatory templates.

What Is the Techno-Legal Approach

The techno-legal approach is defined as the integration of legal instruments, regulatory rules, and oversight mechanisms directly into the technical architecture of AI systems. Governance is not treated as an external compliance obligation applied after deployment, but as an intrinsic design feature embedded throughout development, training, deployment, and operation.

Key objectives of this approach include:

- Proactive risk prevention rather than post-incident enforcement

- Continuous compliance through automated technical controls

- Lifecycle-based governance aligned with real-world AI operations

- Flexibility to adapt safeguards as AI models, data, and risks evolve.
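One way to picture "continuous compliance through automated technical controls" is a deployment gate that evaluates obligations as machine-checkable rules. The sketch below is purely illustrative: `SystemProfile`, the three controls, and the function names are assumptions made for this example and do not come from the White Paper.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SystemProfile:
    """Hypothetical facts a deployer records about an AI system."""
    consent_verified: bool
    audit_logging_enabled: bool
    risk_assessment_documented: bool


# Each control pairs a human-readable obligation with a machine check.
# The obligations listed here are illustrative, not an official checklist.
CONTROLS: dict[str, Callable[[SystemProfile], bool]] = {
    "consent verified for training data": lambda s: s.consent_verified,
    "audit logging enabled": lambda s: s.audit_logging_enabled,
    "model risk assessment documented": lambda s: s.risk_assessment_documented,
}


def failed_controls(profile: SystemProfile) -> list[str]:
    """Return the obligations the system does not currently meet."""
    return [name for name, check in CONTROLS.items() if not check(profile)]


def may_deploy(profile: SystemProfile) -> bool:
    """Allow deployment only when every control passes."""
    return not failed_controls(profile)
```

Because the controls are data rather than hard-coded logic, a safeguard could be added or tightened without changing the gate itself, which is one plausible reading of the flexibility objective above.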

Lifecycle-Based AI Governance Model

A central pillar of the framework is governance across the full AI lifecycle. The White Paper identifies five critical stages, each with distinct risks and mitigation requirements:

- Data Collection and Ingestion: Risks include unlawful data collection, inclusion of biased or malicious datasets, intellectual property violations, and data poisoning. Controls such as data governance frameworks, consent verification, data classification, and impact assessments are emphasized to prevent downstream harm.

- Data-in-Use Protection: This stage focuses on protecting data during training and processing. Privacy-enhancing technologies, access controls, encryption, audit logs, and secure computation environments are highlighted as mandatory safeguards, particularly given the irreversible nature of model training on sensitive data.

- AI Training and Model Assessment: Risks such as model memorization, bias amplification, unsafe behavior, and lack of explainability are addressed through red-teaming, benchmarking, fairness and safety scoring, and documented model risk assessments to support transparent and defensible model selection.

- Safe AI Inference: During real-world interaction, AI systems face threats such as hallucinations, prompt injection, data leakage, and misuse. Runtime monitoring, responsible AI firewalls, risk tagging, and adversarial attack detection are proposed to ensure controlled and secure inference.

- Trusted and Agentic AI Systems: As AI systems gain autonomy, risks escalate. The framework calls for agent identity management, authorization, continuous behavior logging, policy-based orchestration, and kill switches to maintain human oversight and organizational control.
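The agentic-stage controls (identity, authorization, behavior logging, and a kill switch) can be sketched as a thin wrapper around every action an agent attempts. This is a minimal illustration under stated assumptions: `AgentGuard`, `KillSwitchEngaged`, and the action names are hypothetical, and a production system would need persistent, tamper-resistant logs and a real identity provider.

```python
import threading
from datetime import datetime, timezone


class KillSwitchEngaged(RuntimeError):
    """Raised when an action is attempted after the agent was halted."""


class AgentGuard:
    """Hypothetical wrapper enforcing authorization, logging, and a kill switch."""

    def __init__(self, agent_id, allowed_actions):
        self.agent_id = agent_id                      # agent identity
        self.allowed_actions = frozenset(allowed_actions)
        self._halted = threading.Event()              # thread-safe kill switch
        self.log = []                                 # in-memory behavior log

    def engage_kill_switch(self):
        """Human operator halts all further actions by this agent."""
        self._halted.set()

    def execute(self, action, handler):
        """Run handler() only if the agent is live and authorized for action."""
        if self._halted.is_set():
            self._record(action, "blocked: kill switch")
            raise KillSwitchEngaged(f"{self.agent_id} is halted")
        if action not in self.allowed_actions:
            self._record(action, "denied: not authorized")
            raise PermissionError(f"{self.agent_id} may not perform {action}")
        result = handler()
        self._record(action, "allowed")
        return result

    def _record(self, action, outcome):
        self.log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": self.agent_id,
            "action": action,
            "outcome": outcome,
        })
```

Routing every action through a single `execute` call means denied and blocked attempts are logged alongside successful ones, which is what makes the log useful for oversight.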

Institutional Governance Architecture

To operationalize the techno-legal framework, the White Paper proposes a coordinated institutional ecosystem:

- AI Governance Group (AIGG): A high-level coordination body chaired by the Principal Scientific Adviser, responsible for harmonizing AI governance across ministries, regulators, and sectors.

- Technology and Policy Expert Committee (TPEC): A multidisciplinary advisory body supporting AIGG with expertise in law, policy, AI safety, cybersecurity, and public administration.

- AI Safety Institute (AISI): A national center for evaluating high-risk AI systems, developing safety tools, conducting compliance reviews, and engaging with global AI safety initiatives.

- National AI Incident Database: A centralized repository to record, classify, and analyze AI-related incidents, enabling evidence-based policy updates and targeted regulatory interventions.
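To make "record, classify, and analyze" concrete, the sketch below shows what a structured incident record and a simple aggregation might look like. The field names, severity levels, and category labels are assumptions for illustration only; the White Paper does not specify a schema.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class Incident:
    """Hypothetical incident record; every field name is an assumption."""
    incident_id: str
    sector: str            # e.g. "finance", "health"
    lifecycle_stage: str   # e.g. "inference", "training"
    category: str          # e.g. "deepfake", "data leakage"
    severity: Severity


def count_by(incidents, field):
    """Tally incidents by one attribute to show where harm concentrates."""
    return Counter(getattr(incident, field) for incident in incidents)
```

Tallying the same records by `sector`, `lifecycle_stage`, or `category` is the kind of analysis that could point regulators toward a targeted intervention rather than a blanket rule.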

Addressing Emerging and High-Risk Threats

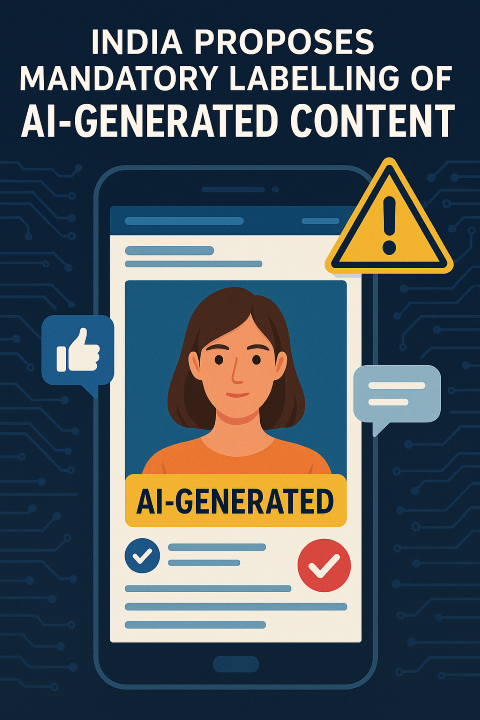

The White Paper devotes particular attention to challenges such as deepfakes and synthetic media, where traditional takedown mechanisms are insufficient. It advocates for content provenance technologies, persistent identifiers, cryptographic metadata, coordinated incident reporting, and infrastructure-level obligations to disrupt deepfake pipelines at scale.
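The combination of persistent identifiers and cryptographic metadata can be illustrated with a signed manifest that travels with a piece of synthetic media. This is a simplified sketch, not the White Paper's design: it uses a shared-secret HMAC from the Python standard library as a stand-in, whereas real provenance schemes typically rely on public-key signatures and certificates. The manifest fields and function names are assumptions.

```python
import hashlib
import hmac
import json
import uuid


def _sign(fields: dict, key: bytes) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the manifest fields."""
    payload = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, generator: str, key: bytes) -> dict:
    """Attach a persistent identifier and signed metadata to generated content."""
    manifest = {
        "content_id": str(uuid.uuid4()),                 # persistent identifier
        "sha256": hashlib.sha256(content).hexdigest(),   # binds manifest to content
        "generator": generator,                          # hypothetical tool name
        "synthetic": True,
    }
    manifest["signature"] = _sign(manifest, key)
    return manifest


def verify(content: bytes, manifest: dict, key: bytes) -> bool:
    """Check that neither the metadata nor the content has been altered."""
    fields = {k: v for k, v in manifest.items() if k != "signature"}
    expected = _sign(fields, key)
    signature_ok = hmac.compare_digest(expected, manifest.get("signature", ""))
    content_ok = fields.get("sha256") == hashlib.sha256(content).hexdigest()
    return signature_ok and content_ok
```

A platform receiving the media could call `verify` before distribution; a failed check signals that either the file or its declared origin was changed after generation.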

Cross-border AI deployment is another critical concern. The framework emphasizes global alignment on core AI principles such as privacy, security, safety, transparency, accountability, and non-discrimination, supported by techno-legal tools that translate legal obligations into system-level controls capable of operating across jurisdictions.

Role of Digital Public Infrastructure

India’s Digital Public Infrastructure, including consent-based data sharing frameworks and interoperable digital systems, is positioned as a key enabler of scalable techno-legal governance. By integrating governance tools with existing digital infrastructure, compliance costs can be reduced while ensuring inclusion, auditability, and trust at population scale.

Expert Commentary

AI governance is no longer optional or purely regulatory. Embedding governance into technology is presented as essential for sustaining innovation, protecting citizens’ rights, and maintaining public trust. The techno-legal approach reframes AI governance as a strategic enabler rather than a barrier.

Outlook

India’s techno-legal AI governance framework signals a shift toward compliance-by-design and risk-based regulation that aligns innovation with safety. If effectively implemented, it could serve as a global reference model for countries seeking to balance economic growth, technological leadership, and societal protection in the age of artificial intelligence.

The success of this approach will depend on institutional coordination, industry participation, continuous capacity building, and adaptive policy updates as AI technologies evolve.

References / Source Attribution

- Office of the Principal Scientific Adviser to the Government of India, Press Information Bureau White Paper

- Strengthening AI Governance Through Techno-Legal Framework, India’s AI Policy Priorities White Paper Series, January 2026